Introduction

As artificial intelligence (AI) and machine learning (ML) systems become part of everyday life, the demand for transparency in how models are trained has grown sharply. That demand is not only technical; it is also ethical, social, and legal. This article explores why transparency in model training is a public challenge, examining its implications, the obstacles to achieving it, and possible paths toward greater accountability.

The Importance of Transparency

Transparency refers to the clarity and openness with which processes are conducted and decisions are made. In model training, it covers the methodologies, data sources, and algorithms used to build AI systems. Understanding these elements is crucial for several reasons:

- Accountability: Without transparency, it becomes challenging to hold developers and organizations accountable for the outcomes of their AI systems.

- Trust: Users and stakeholders are more likely to trust systems whose workings are openly disclosed.

- Ethical Standards: Transparency facilitates adherence to ethical standards by making it easier to identify biases and inequalities in AI systems.

- Informed Decision-Making: For policymakers, researchers, and the public, transparency enables informed decision-making based on a clear understanding of AI capabilities and limitations.
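As a minimal illustration of the ethical-standards point, the sketch below shows the kind of check that becomes possible once a model's decisions, and the groups they affect, are disclosed: it compares the rate of positive outcomes across groups. The function names, the toy data, and the choice of "lowest group rate divided by highest group rate" as the disparity measure are all assumptions made for illustration, not a standard this article prescribes.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions for each group.

    `decisions` is a list of (group, approved) pairs; this layout and the
    group labels are hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any positive decision
    return min(rates.values()) / highest


# Toy decisions, invented purely for illustration.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = selection_rates(decisions)
print(rates)                   # {'A': 0.75, 'B': 0.25}
print(disparity_ratio(rates))  # approximately 0.333
```

A ratio well below 1.0 flags a gap worth investigating; it does not by itself prove the model is unfair. The point is that this check needs exactly the information that opaque training and deployment practices withhold.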

Historical Context

Concern about transparency in model training is not new. As machine learning models grew more complex, they came to be viewed as black boxes: systems whose internal workings were not fully understood even by their creators. This opacity made biased decisions and erroneous predictions harder to detect and correct. Well-publicized incidents, such as biased hiring algorithms and discriminatory lending decisions, underscored the urgent need for transparency.

Current Challenges

Despite the growing recognition of the need for transparency, several challenges hinder progress:

1. Complexity of Algorithms

Modern AI models, especially deep learning systems, combine intricate architectures with vast amounts of training data, which makes them inherently difficult to interpret. This complexity can make it hard even for experts to explain how a particular decision was reached.

2. Proprietary Interests

Many organizations view their algorithms as proprietary information, protecting them as trade secrets. This corporate secrecy can create a barrier to transparency, limiting external scrutiny and accountability.

3. Regulatory Environment

The regulatory landscape concerning AI is still evolving. Many jurisdictions lack clear guidelines that mandate transparency in model training, creating a gap between public expectations and industry practices.

4. Public Understanding

The general public often lacks a foundational understanding of AI and machine learning, which complicates discussions around transparency. This gap can lead to mistrust and skepticism towards AI systems.

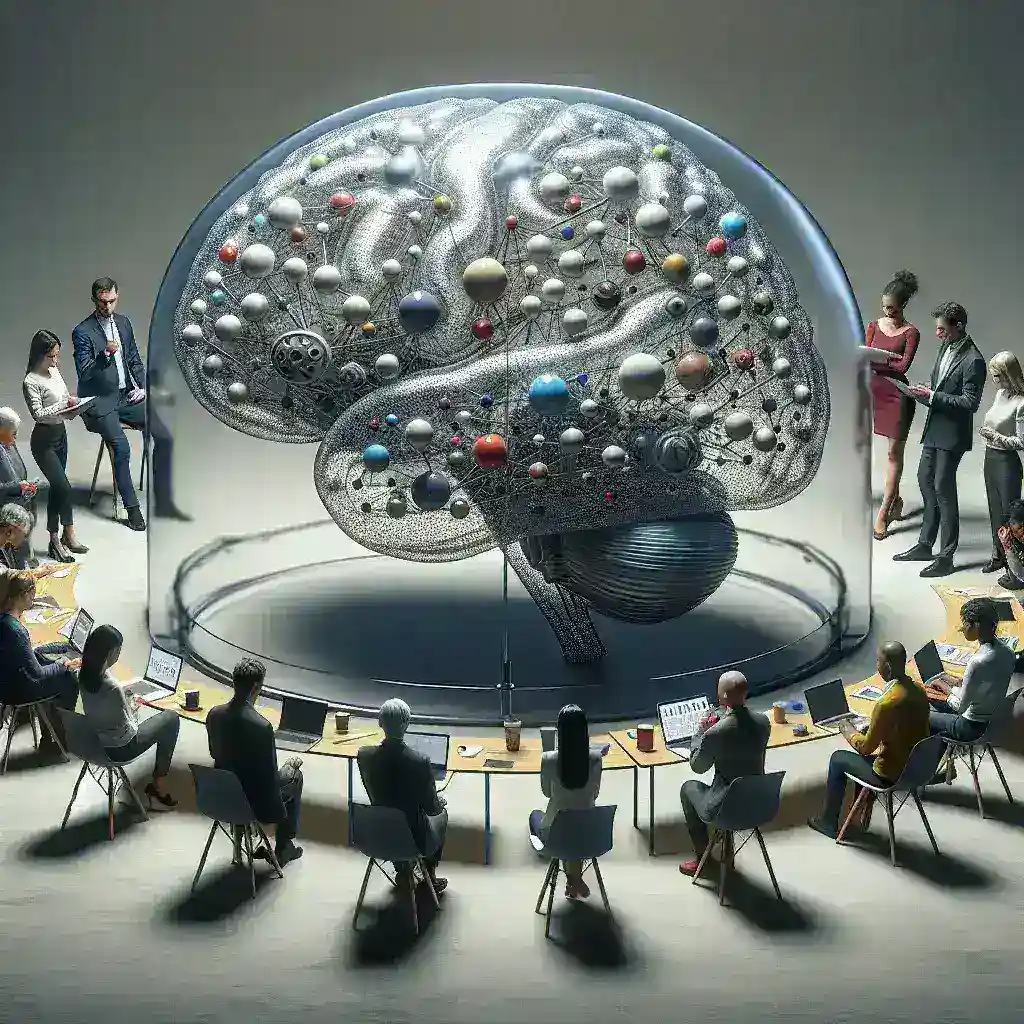

The Need for a Multi-Faceted Approach

Addressing the challenges of transparency in model training requires a multi-faceted approach:

1. Standardization of Practices

Establishing industry-wide standards for transparency can help demystify model training processes. This could include guidelines for documenting data sources, training methodologies, and model evaluations.
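One way to make such guidelines concrete is a structured record that travels with each trained model. The sketch below is a minimal, hypothetical schema covering the three areas named above (data sources, training methodology, evaluations). The class names, field names, and placeholder values are assumptions, loosely in the spirit of published "model card" and "datasheet" proposals rather than the format of any actual standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DataSource:
    """One dataset used in training (hypothetical fields)."""
    name: str
    license: str
    collection_period: str


@dataclass
class TrainingRecord:
    """Hypothetical documentation record for one trained model."""
    model_name: str
    data_sources: list[DataSource]
    training_method: str
    evaluations: dict[str, float] = field(default_factory=dict)

    def missing_fields(self):
        """List the documentation sections that are still empty."""
        missing = []
        if not self.data_sources:
            missing.append("data_sources")
        if not self.training_method.strip():
            missing.append("training_method")
        if not self.evaluations:
            missing.append("evaluations")
        return missing

    def to_json(self):
        # asdict converts nested dataclasses (DataSource) into plain dicts.
        return json.dumps(asdict(self), indent=2)


# Placeholder values for illustration only; the method is left blank on purpose.
record = TrainingRecord(
    model_name="example-classifier",
    data_sources=[DataSource("public-reviews", "CC-BY-4.0", "2020-2022")],
    training_method="",
    evaluations={"accuracy": 0.91},
)
print(record.missing_fields())  # ['training_method']
```

A check like `missing_fields` is one way a shared standard could be enforced mechanically, for example by refusing to publish a model whose record is incomplete; serializing to JSON keeps the record readable by both people and tools.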

2. Collaborative Efforts

Collaboration between academia, industry, and policymakers is essential. By working together, these stakeholders can share knowledge and best practices and develop regulatory frameworks that prioritize transparency.

3. Public Engagement

Engaging the public through education initiatives can enhance understanding of AI systems. This can empower individuals to participate in discussions about transparency and ethical considerations.

4. Ethical AI Frameworks

Developing ethical AI frameworks that prioritize transparency and accountability can guide organizations in their practices, helping to align their goals with public expectations.

Future Predictions

As society continues to integrate AI into various sectors, the demand for transparency will only intensify. Future developments might include:

- Legislative Action: We can anticipate more comprehensive regulations that enforce transparency in model training.

- Advancements in Explainable AI: Research in explainable AI (XAI) will likely produce models that are inherently more interpretable and user-friendly.

- Increased Public Scrutiny: The public’s growing awareness of, and interest in, AI will bring closer scrutiny of how models are trained and deployed.

Conclusion

Transparency in model training is a significant public challenge that intersects with technological, ethical, and societal dimensions. By addressing the barriers to transparency through collaborative efforts, standardization, and public engagement, we can pave the way for a future where AI systems are accountable, trustworthy, and aligned with human values. The journey toward transparency is not merely a technical necessity; it is an imperative for building a just and equitable society.